
Nvidia Corporation’s (NASDAQ:NVDA) data center segment overtook Gaming to become its largest segment in Q1 FY2023, growing a robust 83% YoY. Using the company’s breakdown of its data center business into 6 data center classes, we examined the product offerings that cater to each class and assessed the outlook for the data center segment as a whole.

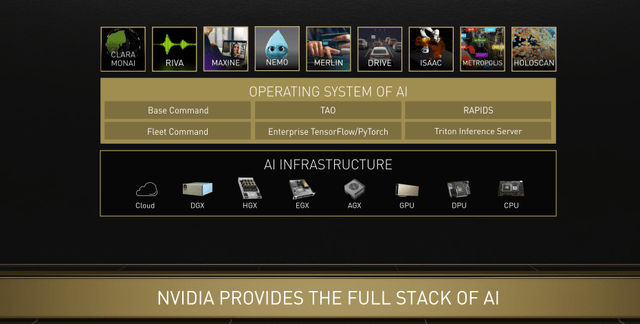

Moreover, we looked at how the company combines its GPUs and software into a full stack for data centers, and how it is integrating AI and software functionality to build on its data center leadership.

As the company recently introduced its Arm CPU products for data centers, we analyzed the Arm CPU market and its players, and projected Arm’s share versus x86 processors. Based on this, we estimated the market opportunity for Nvidia and its revenue growth.

Dominating Data Centers Across All 6 Classes

Nvidia’s data center segment has become its largest, accounting for 45% of revenues in Q1 FY2023, and had the highest growth, with a CAGR of 73.8% over the past 5 years. Its computing platform consists of hardware and software such as GPUs, DPUs, interconnects and systems, the CUDA programming model and software libraries. Nvidia’s CEO listed 6 classes of data centers: supercomputing centers, enterprise computing data centers, hyperscalers, cloud computing and two new classes, Factory AI and edge data centers. In the table below, we compiled the data center classes by market size, forecast CAGR, relative compute power, location, footprint, users and applications.

| Data Center | Market Size ($ bln) | Market Forecast CAGR | Compute Power | Location | Footprint (‘size’) | Types of Users/Operators | Applications |
|---|---|---|---|---|---|---|---|
| Supercomputing Data Center | 6.5 | 16.2% | Very High | Self-operated | Large | Governments, aerospace, petroleum, and automotive industries | HPC, quantum mechanics, weather forecasting, oil and gas exploration, molecular modeling, physical simulations, aerodynamics, nuclear fusion research |
| Hyperscale Data Center | 32.2 | 14.9% | High | Self-operated | Very Large | Large multinational companies, cloud service providers | Colocation, cryptography, genome processing, and 3D rendering |
| Enterprise Data Center | 84.2 | 12.0% | Low | Self-operated | Medium | Enterprises (Various industries) | Company networks and systems (Various industries) |
| Cloud Computing Data Center | 358.8 | 16.4% | High | Third-party | Very Large | Cloud service providers | Cloud-native application development, storage (IaaS), streaming, data analytics |
| Edge Data Center | 7.9 | 17.0% | Medium | Third-party | Medium | Edge Data Center Companies, Telco, Healthcare | 5G, AV, Telemedicine, data analytics |
| Factory AI Data Center | 2.3 | 47.9% | Medium | Self-operated | Low | Manufacturers | Supply Chain Optimization, Predictive Maintenance, Process Control |

Source: Research and Markets, Nvidia, Khaveen Investments

To illustrate the size of each data center class, we compiled market revenues and forecast CAGRs from Research and Markets. Based on the table above, cloud computing is the largest class ($359 bln) as it includes major cloud service providers such as AWS, Azure and Google Cloud. It is followed by enterprise data centers ($84 bln). Overall, the combined market size of the 6 data center classes is around $491 bln. However, the new data center classes, Factory AI and edge data centers, have the highest forecast CAGRs of 47.9% and 17% respectively.
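As a quick check on the figures above, the following minimal Python sketch sums the market sizes and ranks the classes by forecast CAGR. The values are copied from the table; the variable names are ours and purely illustrative. With the rounded table values the total comes to about $491.9 bln, in line with the roughly $491 bln combined market size.

```python
# Market size ($ bln) and forecast CAGR per data center class, copied from the table above.
classes = {
    "Supercomputing": (6.5, 0.162),
    "Hyperscale": (32.2, 0.149),
    "Enterprise": (84.2, 0.120),
    "Cloud Computing": (358.8, 0.164),
    "Edge": (7.9, 0.170),
    "Factory AI": (2.3, 0.479),
}

# Combined market size across all 6 classes.
total_size = sum(size for size, _ in classes.values())

# Largest class by market size, and the two classes with the highest forecast CAGR.
largest = max(classes, key=lambda name: classes[name][0])
fastest = sorted(classes, key=lambda name: classes[name][1], reverse=True)[:2]

print(f"Combined market size: ${total_size:.1f} bln")
print(f"Largest class: {largest}")
print(f"Fastest-growing classes: {fastest}")
```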

Supercomputing Data Center

Firstly, supercomputing data centers house computers with much higher computational capacities that support intensive applications such as HPC, quantum mechanics, weather forecasting, oil and gas exploration, molecular modeling, physical simulations, aerodynamics and nuclear fusion research.

In 2021, Nvidia claimed that 70% of the TOP500 supercomputers in the world were powered by its accelerators, rising to 90% for new systems. This is remarkable growth from a 34% share of TOP500 systems in 2011. For example, the company’s GPUs power the fastest supercomputers in the U.S. and Europe, such as Oak Ridge National Laboratory’s Summit, the world’s smartest supercomputer. The company recently introduced its H100 GPUs based on its Hopper architecture, which follow its A100 GPUs based on its Ampere architecture. Supercomputers are equipped with a large number of GPUs; Nvidia previously stated that 6 supercomputers used a total of 13,000 A100 GPUs.

Enterprise Data Center

Besides supercomputers, the company also targets enterprise systems. According to Cisco, enterprise data centers are built and operated by companies on their own premises and optimized to support the data and storage requirements of their users, in industries such as IT, financial services and healthcare. In comparison, hyperscale data centers have higher compute capacities. According to Nvidia, its NVIDIA-Certified Systems “enable enterprises to confidently deploy hardware solutions that securely and optimally run their modern accelerated workloads.”

The company’s Nvidia-certified data center partners include top server providers such as Lenovo (OTCPK:LNVGY), Fujitsu (OTCPK:FJTSF), Dell (DELL), Cisco (CSCO) and HPE (HPE), which have a combined share of over 38% of the server market according to IDC. The company also introduced its EGX platform for enterprise as well as edge computing.

Hyperscale Data Centers

Moreover, Nvidia also targets hyperscale data centers, which are massive facilities exceeding 5,000 servers and 10,000 square feet according to IDC. They are “designed to support robust and scalable applications” thanks to their ability to scale up or down to meet customers’ demands by adding more computing power to their infrastructure. Companies which operate these facilities include Yahoo, Facebook (META), Microsoft (MSFT), Apple (AAPL), Google (GOOG, GOOGL) and Amazon (AMZN). According to Vertiv, there were more than 600 hyperscale data centers in 2021. Nvidia offers “ready-to-use system reference designs” based on its GPUs, such as its HGX product for hyperscale and supercomputing data centers.

Cloud Computing

Additionally, the company also highlights cloud computing data centers, which allow customers and developers to use Nvidia’s hardware through the cloud to support applications such as advanced medical imaging, automated customer service and cinematic-quality gaming. According to Microsoft, cloud computing is the delivery of computing services over the internet through service models such as IaaS, PaaS and SaaS, with use cases including creating cloud-native applications, streaming and data analytics. Nvidia has partnerships with major cloud service providers including Amazon, the leader in the cloud infrastructure market with a 33% share in 2021 according to Canalys, followed by Microsoft Azure, Google Cloud and Alibaba Cloud (BABA, OTCPK:BABAF). These cloud providers are also part of the company’s partner ecosystem.

And now, with NVIDIA’s GPU-accelerated solutions available through all top cloud platforms, innovators everywhere can access massive computing power on demand and with ease. – Nvidia

AI Factory

In addition to these 4 classes of data centers, the company highlighted the first new data center class, the “AI Factory.” According to CEO Jensen Huang, manufacturers are becoming “intelligence manufacturers” that process and refine data. The company highlighted its GPU-accelerated computing for AI applications including Supply Chain Optimization, Predictive Maintenance and Process Control, which offer operations optimization, improved time-to-insight and lower cost. Nvidia’s CEO pointed to 150,000 factories refining data, creating models and becoming intelligence manufacturers. The company offers its AGX platform for autonomous machines. One customer is BMW, which is using Nvidia’s hardware and software for its robotics and machinery.

The idea is to equip BMW’s factory with all manner of Nvidia hardware. First, the company will use Nvidia’s DGX and Isaac simulation software to train and test the robots; Nvidia Quadro ray-tracing GPUs will render synthetic machine parts. – Nvidia CEO

Edge Data Center

Lastly, the company highlighted edge data centers, which are smaller data centers located closer to end-users for lower latency and greater speed, according to Nlyte Software. Nvidia noted that edge data centers span a wide range of settings such as “warehouse, retail stores, cities, public places, cars, robots”. Whereas in cloud computing data is sent from the edge to the cloud, in edge computing data is processed right at the edge. The company offers its EGX platform for enterprise and edge computing. According to the company, its NVIDIA EGX and Jetson solutions “accelerate the most powerful edge computing systems to power diverse applications, including industrial inspection, predictive maintenance, factory robotics, and autonomous machines.”

Furthermore, we updated our revenue projection for Nvidia’s data center segment from our previous analysis in the table below, based on the segment’s revenue as a share of total cloud market capex. To derive this, we forecasted total cloud market capex from our projection of the total cloud market, which in turn is based on data volume growth forecasts.

| Volume of Data Worldwide | 2017 | 2018 | 2019 | 2020 | 2021 | 2022F | 2023F | 2024F | 2025F | 2026F |
|---|---|---|---|---|---|---|---|---|---|---|
| Cloud Infrastructure Market Revenues ($ bln) | 46.5 | 69 | 96 | 129.5 | 178.0 | 248.1 | 349.7 | 485.7 | 679.8 | 951.4 |
| Cloud Infrastructure Market Revenue Growth % | 45% | 48% | 39% | 35% | 37% | 39% | 41% | 39% | 40% | 40% |
| Data Volume (ZB) | 26 | 33 | 41 | 64.2 | 79 | 97 | 120 | 147 | 181 | 222.9 |
| Data Volume Growth % | 44% | 27% | 24% | 57% | 23% | 23% | 24% | 23% | 23% | 23% |
| Total Market Capex (Adjusted, $ bln) | 54.3 | 82.8 | 88.0 | 125.7 | 163.9 | 209 | 271 | 344 | 442 | 567 |
| Total Market Capex Growth % | 30% | 52% | 6% | 43% | 30% | 28% | 29% | 27% | 28% | 28% |
| Nvidia Data Center Share of Capex Spend | 3.6% | 3.5% | 3.4% | 5.3% | 6.5% | 6.5% | 6.5% | 6.5% | 6.5% | 6.5% |
| Nvidia Data Center Revenues ($ bln) | 1.9 | 2.9 | 3.0 | 6.7 | 10.6 | 13.6 | 17.5 | 22.3 | 28.6 | 36.7 |
| Nvidia Data Center Revenues Growth % | 132.5% | 51.8% | 1.8% | 124.5% | 58.5% | 27.7% | 29.2% | 27.3% | 28.3% | 28.3% |

Source: Nvidia, Company Data, Khaveen Investments

Overall, we believe the outlook for the company’s data center segment is supported by its presence across the 6 types of data centers it highlighted: supercomputers, enterprise computing, hyperscalers, cloud computing, edge computing and Factory AI. Besides a broad product portfolio catering to each data center class, the company also has partnerships with key customers such as major server vendors and cloud service providers. Based on our revenue projection, we derived an average revenue growth rate of 28.2% for the segment from 2022 through 2026.
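The projection logic can be sketched in a few lines of Python: forecast revenue is total market capex multiplied by an assumed constant 6.5% share, and the 28.2% figure is the simple average of the forecast growth rates. The inputs below are the rounded values from the table, so the computed revenues land within about $0.2 bln of the table rather than matching exactly; the variable names are illustrative only.

```python
# Forecast years and inputs copied from the table above (rounded values).
years = ["2022F", "2023F", "2024F", "2025F", "2026F"]
capex = [209, 271, 344, 442, 567]                # total market capex, $ bln
share = 0.065                                    # Nvidia data center share of capex spend
stated_growth = [27.7, 29.2, 27.3, 28.3, 28.3]   # growth % row from the table

# Revenue is modeled as a fixed share of total market capex.
revenue = [round(c * share, 1) for c in capex]

# Average of the forecast growth rates for 2022F through 2026F.
avg_growth = sum(stated_growth) / len(stated_growth)

for year, rev in zip(years, revenue):
    print(f"{year}: ${rev} bln")
print(f"Average growth: {avg_growth:.1f}%")
```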

Integrating Software and AI into Data Centers

Data centers run on chips including GPUs, central processing units (CPUs) and field-programmable gate arrays (FPGAs), which are among the most commonly used data center chips according to IMARC. Nvidia highlighted the greater compute capabilities of GPUs used as data center accelerators, which run tens of thousands of threads, compared to CPUs. According to Network World, “GPUs are better suited than CPUs for handling many of the calculations required by AI and machine learning in enterprise data centers and hyperscaler networks.”

According to Ark Invest, CPUs comprised 83% of data center budgets in 2020 but were forecasted to decline to 40% by 2030 as GPUs become the dominant processor.

In its annual report, Nvidia claims to have a platform strategy that brings its hardware, software, algorithms and software libraries together. Furthermore, the company highlighted the introduction of its CUDA programming model, which opened up the parallel processing capabilities of its GPUs for compute-intensive workloads such as deep learning and machine learning.

With our introduction of the CUDA programming model in 2006, we opened the parallel processing capabilities of our GPU for general-purpose computing. This approach significantly accelerates the most demanding high-performance computing, or HPC, applications in fields such as aerospace, bioscience research, mechanical and fluid simulations, and energy exploration. Today, our GPUs and networking accelerate many of the fastest supercomputers across the world. In addition, the massively parallel compute architecture of our GPUs and associated software are well suited for deep learning and machine learning, powering the era of AI. While traditional CPU-based approaches no longer deliver advances on the pace described by Moore’s Law, we deliver GPU performance improvements on a pace ahead of Moore’s Law, giving the industry a path forward. – Nvidia 2022 Annual Report

In addition, as seen in the chart above, the company claims to provide a full stack of AI solutions. Besides its hardware, Nvidia has a collection of AI software solutions and development kits for customers and software developers including Clara MONAI, Riva, Maxine, NeMo and Merlin. Moreover, according to the company, it has “over 450 NVIDIA AI libraries and software development kits to serve industries such as gaming, design, quantum computing, AI, 5G/6G, and robotics.”

Furthermore, its products support various AI frameworks and software such as RAPIDS, TensorFlow and PyTorch. As Nvidia continued to build up its AI stack, its patent count rose steadily from 2018 to 1,174 in 2021 based on GlobalData. In comparison, AMD’s patents have also been rising since 2017 and number more (1,795), while Intel’s patent filings have been declining although it still holds the most patents (11,677).

Additionally, the company introduced standalone enterprise software offerings including NVIDIA AI Enterprise, which is priced at $1,000 per node, and 25,000 enterprises already use its technology for AI. Based on its Investor Day presentation, the company has an enterprise server installed base of 50 mln and a TAM of $150 bln for its Enterprise AI software. To determine the share of TAM we expect Nvidia to capture, we compared it against AMD and Intel in terms of breadth of products, AI software integrations, GPU and CPU performance, and price. In each category, we assigned a score of 0.5 to the best company, 0.3 to the second-best and 0.2 to the last, and used each company’s average score across categories as our assumption for its share of the TAM.

| Competitive Positioning | Nvidia | Intel | AMD |
|---|---|---|---|
| Number of products | 7 | 5 | 4 |
| Software AI Integrations | 21 | 18 | 7 |
| Average Data Center CPU Benchmark | N/A | 34,237 | 76,308 |
| Average Data Center CPU Price | N/A | $2,277 | $3,843 |
| GPU Performance (TFLOPS) | 60 | N/A | 47.9 |
| GPU Price | $36,405 | N/A | $14,500 |

| Competitive Positioning (Score) | Nvidia | Intel | AMD |
|---|---|---|---|
| Number of products | 0.5 | 0.3 | 0.2 |
| Software AI Integrations | 0.5 | 0.3 | 0.2 |
| Average Data Center CPU Benchmark | 0.2 | 0.5 | 0.3 |
| Average Data Center CPU Price | 0.2 | 0.5 | 0.3 |
| GPU Performance (TFLOPS) | 0.5 | 0.2 | 0.3 |
| GPU Price | 0.3 | 0.2 | 0.5 |
| Weights | 0.37 | 0.33 | 0.30 |

Source: Nvidia, Intel, AMD, WFTech, Khaveen Investments
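The weights row can be reproduced as a simple average of the category scores. The sketch below is a minimal Python illustration using the scores exactly as they appear in the scoring table; the dictionary layout and names are our own.

```python
# Category scores copied from the scoring table above.
# Column order in each row: Nvidia, Intel, AMD.
companies = ["Nvidia", "Intel", "AMD"]
scores = {
    "Number of products":                [0.5, 0.3, 0.2],
    "Software AI Integrations":          [0.5, 0.3, 0.2],
    "Average Data Center CPU Benchmark": [0.2, 0.5, 0.3],
    "Average Data Center CPU Price":     [0.2, 0.5, 0.3],
    "GPU Performance (TFLOPS)":          [0.5, 0.2, 0.3],
    "GPU Price":                         [0.3, 0.2, 0.5],
}

# Each company's weight is its average score across the six categories.
weights = {
    name: round(sum(row[i] for row in scores.values()) / len(scores), 2)
    for i, name in enumerate(companies)
}
print(weights)
```

Because every category carries the same importance in this scheme, a company that leads in software breadth but has no shipping CPU, as Nvidia does here, still ends up only modestly ahead.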

Based on the table, Nvidia has the broadest product range with 7 products, ahead of Intel (5) and AMD (4), as its offerings include GPUs and DPUs as well as reference design systems such as AGX, HGX, EGX and DGX. It is also planning to introduce CPUs based on the Arm architecture. Intel follows with its portfolio of ASICs, FPGAs, GPUs, CPUs and SmartNICs, while AMD has FPGAs (Xilinx), CPUs, GPUs and DPUs. Furthermore, by referring to these companies’ AI presentation decks and websites, we found that Nvidia has the most AI software integrations (21), with the broad collection stated above in addition to its cloud deployment and infrastructure optimization software including Nvidia GPU Operator, Network Operator, vGPU, Magnum IO, CUDA-AI and vSphere integration as part of its AI Enterprise package. As Nvidia’s CPU and Intel’s GPU have yet to launch, we marked them as N/A and ranked them lowest in our calculations.

In terms of hardware, we compared Intel and AMD data center CPUs using our previous analysis of Intel, where we determined that AMD has a performance advantage based on its higher benchmark score but is priced at a premium to Intel. Additionally, we compared GPUs by their TFLOPS specs: Nvidia’s H100 has a higher maximum of 60 TFLOPS compared to 47.9 TFLOPS for AMD’s Instinct MI250. However, Nvidia’s GPU has a higher estimated price than AMD’s.

| Nvidia Enterprise AI Software Revenue ($ bln) | 2021 | 2022F | 2023F | 2024F | 2025F | 2026F | 2027F | 2028F |
|---|---|---|---|---|---|---|---|---|
| Market TAM | 150 | | | | | | | |
| Nvidia Enterprise AI Software | 0.03 | 0.1 | 0.2 | 0.7 | 2.0 | 6.1 | 18.3 | 55 |
| Growth % | | 200% | 200% | 200% | 200% | 200% | 200% | 200% |

Source: Nvidia, Khaveen Investments

Overall, we determined that Nvidia edged out Intel and AMD with the strongest competitive positioning, at an average weight of 37%, which we used as our assumption for its share of the Enterprise AI software TAM. Based on the company’s $150 bln TAM from its Investor Day, we estimated its revenue opportunity at $55 bln by 2028, which implies a CAGR of 200% from 2021 (with 2021 revenue calculated from its price of $1,000 and 25,000 existing customers). We believe this is not unreasonable given the expected rise of AI, which could contribute $15.7 tln in economic output by 2030 according to PwC.
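The arithmetic behind the $55 bln opportunity and the 200% CAGR can be sketched as follows. This is a minimal illustration that assumes, as the calculation above implies, one $1,000 node per existing customer in 2021 and a 7-year horizon from 2021 to 2028; the variable names are ours.

```python
tam_bln = 150.0          # Enterprise AI software TAM, $ bln
share = 0.37             # Nvidia's average competitive weight
price_per_node = 1_000   # $ per node
customers = 25_000       # enterprises already using the software
years = 7                # 2021 to 2028

# Assumption: 2021 revenue is one node per customer at list price.
base_bln = price_per_node * customers / 1e9

# 2028 revenue opportunity as Nvidia's share of the TAM.
target_bln = tam_bln * share

# Compound annual growth rate needed to get from the base to the target.
cagr = (target_bln / base_bln) ** (1 / years) - 1

print(f"2021 base: ${base_bln:.3f} bln")
print(f"2028 opportunity: ${target_bln:.1f} bln")
print(f"Implied CAGR: {cagr:.0%}")
```

The tiny starting base is what drives the very high growth rate: revenue has to roughly triple every year for seven years to reach the target.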

$10 billion Arm CPU Opportunity in Data Centers

Furthermore, the company recently introduced its Arm-based Grace CPU for data centers. In terms of specifications, it features 144 CPU cores and 1TB/s LPDDR5X memory, connected coherently over NVLink®-C2C. The company also announced that multiple hardware vendors, including ASUS (OTC:AKCPF), Foxconn Industrial Internet, GIGABYTE, QCT, Supermicro and Wiwynn, will build Grace-based systems that will start shipping in H1 2023. Additionally, the company previously secured the Swiss National Supercomputing Centre, which has a budget of around $25 mln (equivalent to 8% of our forecasted Nvidia CPU revenue in 2023), as a customer for its CPUs and GPUs to provide 20 exaflops of AI performance.

According to Omdia, 5% of servers shipped in 2021 had Arm CPUs, up from 2.5% in 2020. According to Softbank (OTCPK:SFTBY), the market share of Arm-based CPUs is forecasted to increase to 25% by 2028. We estimated the x86 data center CPU market size from Intel’s DCG segment revenues of $22.7 bln and its market share of 94.1% in 2021 based on PassMark. We then used Omdia’s 5% Arm share to derive the total data center CPU market, which we forecasted to grow at a CAGR of 10.2% through 2028. Assuming the share of Arm CPUs increases to 25% by 2028 in line with Softbank’s forecast, we derive a total Arm CPU market size of $12.5 bln in 2028.

| Arm CPU Market Projections ($ bln) | 2021 | 2022F | 2023F | 2024F | 2025F | 2026F | 2027F | 2028F |
|---|---|---|---|---|---|---|---|---|
| x86 Data Center CPU Share | 95% | 94% | 92% | 90% | 87% | 84% | 80% | 75% |
| Arm Data Center CPU Share | 5% | 6.3% | 7.9% | 10.0% | 12.5% | 15.8% | 19.9% | 25% |
| Arm Data Center CPU Share CAGR | | 25.8% | 25.8% | 25.8% | 25.8% | 25.8% | 25.8% | |
| x86 Data Center CPU Market Size | 24.1 | 26.2 | 28.4 | 30.6 | 32.8 | 34.8 | 36.4 | 37.6 |
| Growth % | | 8.7% | 8.3% | 7.8% | 7.0% | 6.1% | 4.9% | 3.1% |
| Arm Data Center CPU Market Size | 1.3 | 1.8 | 2.4 | 3.4 | 4.7 | 6.5 | 9.0 | 12.5 |
| Growth % | | 38.7% | 38.7% | 38.7% | 38.7% | 38.7% | 38.7% | 38.7% |
| Total | 25.4 | 28.0 | 30.8 | 34.0 | 37.4 | 41.3 | 45.5 | 50.1 |
| Growth % | | 10.20% | 10.20% | 10.20% | 10.20% | 10.20% | 10.20% | 10.20% |

Source: Intel, Omdia, Softbank, BlueWeave Consulting, Khaveen Investments
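The market-size derivation described before the table can be traced step by step in a short Python sketch. It uses only the inputs quoted in the text (Intel DCG revenue, PassMark share, Omdia and Softbank Arm shares, and the 10.2% market CAGR) and assumes a 7-year horizon from 2021 to 2028; the variable names are illustrative.

```python
intel_dcg_revenue = 22.7   # Intel DCG revenue in 2021, $ bln
intel_x86_share = 0.941    # Intel share of data center CPUs (PassMark)
arm_share_2021 = 0.05      # Arm share of servers shipped (Omdia)
arm_share_2028 = 0.25      # Arm share forecast (Softbank)
total_cagr = 0.102         # forecast CAGR of the total data center CPU market
years = 7                  # 2021 to 2028

# Step 1: gross up Intel's revenue to the whole x86 market.
x86_market_2021 = intel_dcg_revenue / intel_x86_share

# Step 2: x86 is 95% of the total, so gross up again to include Arm.
total_2021 = x86_market_2021 / (1 - arm_share_2021)

# Step 3: grow the total market and apply the Arm share in each end year.
total_2028 = total_2021 * (1 + total_cagr) ** years
arm_2021 = total_2021 * arm_share_2021
arm_2028 = total_2028 * arm_share_2028

# Implied annual growth of the Arm data center CPU market.
arm_cagr = (arm_2028 / arm_2021) ** (1 / years) - 1

print(f"x86 market 2021: ${x86_market_2021:.1f} bln")
print(f"Total market 2021: ${total_2021:.1f} bln, 2028: ${total_2028:.1f} bln")
print(f"Arm market 2021: ${arm_2021:.1f} bln, 2028: ${arm_2028:.1f} bln")
print(f"Arm market CAGR: {arm_cagr:.1%}")
```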

Companies such as Amazon, Ampere and Huawei have been developing Arm-based CPUs for servers. However, unlike Nvidia’s CPU, Amazon’s Graviton processors and Huawei’s Kunpeng chips are used in their own data centers. Based on a comparison of specifications, Nvidia’s CPU offers a superior core count (144) compared to Ampere Altra Max (128), Amazon Graviton3 (64) and Huawei Kunpeng 920 (64). In terms of product and software integration, according to Nvidia, the Grace CPU will support its HPC software development kit and a full suite of CUDA libraries.

| Nvidia Arm CPU Revenue ($ bln) | 2023F | 2024F | 2025F | 2026F | 2027F | 2028F |
|---|---|---|---|---|---|---|
| Share of TAM | 1% | 4.8% | 8.6% | 12.4% | 16.2% | 20% |
| Nvidia CPU Revenue | 0.31 | 1.63 | 3.22 | 5.12 | 7.37 | 10.02 |
| Growth % | | 429.0% | 97.4% | 58.9% | 44.0% | 36.0% |

Source: Khaveen Investments

All in all, we expect Nvidia’s introduction of its Arm CPU to support its data center segment growth, as the company has already secured supercomputer customers and system hardware partners that will build Grace CPU-based systems in H1 2023. Additionally, we believe the company could benefit from its performance advantage, with a 144-core CPU that has a higher core count than its competitors’ and is integrated with its AI software.

To project Nvidia’s CPU revenue, we assumed its share rises from 1% in 2023 to 20% of our estimated market size by 2028, assuming it releases its CPU as planned. We capped our assumption at a 20% market share as we believe Nvidia could face competition not only from Ampere but also from AMD, whose CFO indicated that it could embrace Arm CPUs and which already uses Arm cores in other products such as microcontrollers, while Intel plans to make Arm-based chips for customers with its foundry. This translates to average CPU revenue growth of 133.1% for the company.
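A minimal Python sketch of this projection follows. It assumes the share ramps linearly from 1% to 20%, which matches the shares in the table, and applies it to the "Total" data center CPU market row, since that is what reproduces the revenue figures shown. Because the inputs are rounded, the average growth comes out at roughly 133%, marginally different from the 133.1% quoted.

```python
years = ["2023F", "2024F", "2025F", "2026F", "2027F", "2028F"]
# "Total" data center CPU market row from the market projection table, $ bln.
total_cpu_market = [30.8, 34.0, 37.4, 41.3, 45.5, 50.1]

# Assumption: Nvidia's share ramps linearly from 1% in 2023 to 20% in 2028.
start_share, end_share = 0.01, 0.20
step = (end_share - start_share) / (len(years) - 1)
shares = [start_share + step * i for i in range(len(years))]

# Revenue is the assumed share applied to the total market in each year.
revenue = [market * s for market, s in zip(total_cpu_market, shares)]

# Year-over-year growth from 2024F onward, and its simple average.
growth = [revenue[i] / revenue[i - 1] - 1 for i in range(1, len(revenue))]
avg_growth = sum(growth) / len(growth)

for year, s, rev in zip(years, shares, revenue):
    print(f"{year}: share {s:.1%}, revenue ${rev:.2f} bln")
print(f"Average growth: {avg_growth:.1%}")
```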

Risk: Competition from Intel

In addition to competition from AMD, Nvidia could face greater competition as Intel introduces its data center GPUs. While Intel (INTC) has not established itself in the discrete GPU market despite leading the total GPU market with its integrated GPUs, we believe the company could pose a significant threat to Nvidia. This is because Intel dominated the data center CPU market with a 94% share in 2021 based on PassMark, which we believe gives it an opportunity to leverage its relationships with key data center customers for cross-selling. That said, as covered in our previous analysis, we also expect Advanced Micro Devices (AMD) to gain market share against Intel with the performance advantages of its CPU portfolio.

Valuation

We summarized our revenue projections for the company’s Data Center segment in the table below, while for its other segments we retained the projections from our previous analysis. Our revised projections have a higher average revenue growth forecast of 28.3%, compared to 23.4% in our previous analysis, driven by higher revenue growth in the Data Center segment at an average of 33.6% compared to 21.9% previously.

| Nvidia Revenue Projections ($ mln) | 2021 | 2022F | 2023F | 2024F | 2025F |
|---|---|---|---|---|---|
| Gaming | 12,462 | 15,953 | 20,421 | 26,141 | 33,463 |
| Professional Visualization | 2,111 | 2,318 | 2,545 | 2,794 | 3,068 |
| Data Center | 10,613 | 13,632 | 18,051 | 24,606 | 33,858 |
| Automotive | 566 | 691 | 842 | 1,028 | 1,254 |
| OEM and Other | 1,162 | 1,162 | 1,162 | 1,162 | 1,162 |
| Total | 26,914 | 33,755 | 43,022 | 55,731 | 72,806 |
| Growth % | 61.4% | 25.4% | 27.5% | 29.5% | 30.6% |

Source: Nvidia, Khaveen Investments
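The totals and average growth rates can be cross-checked with a short Python sketch using the segment figures from the table. Since the table values are rounded, the summed totals differ from the Total row by up to $1 mln, and the Data Center average works out to about 33.7%, close to the 33.6% cited. The helper name `avg_growth` is our own.

```python
# Segment revenue projections ($ mln) for 2021, 2022F, 2023F, 2024F, 2025F.
segments = {
    "Gaming": [12462, 15953, 20421, 26141, 33463],
    "Professional Visualization": [2111, 2318, 2545, 2794, 3068],
    "Data Center": [10613, 13632, 18051, 24606, 33858],
    "Automotive": [566, 691, 842, 1028, 1254],
    "OEM and Other": [1162, 1162, 1162, 1162, 1162],
}

# Total revenue per year is the column-wise sum of the segments.
totals = [sum(column) for column in zip(*segments.values())]


def avg_growth(series):
    """Simple average of year-over-year growth rates across a series."""
    rates = [series[i] / series[i - 1] - 1 for i in range(1, len(series))]
    return sum(rates) / len(rates)


total_avg = avg_growth(totals)
data_center_avg = avg_growth(segments["Data Center"])

print(f"Totals: {totals}")
print(f"Average total growth 2022F-2025F: {total_avg:.1%}")
print(f"Average Data Center growth 2022F-2025F: {data_center_avg:.1%}")
```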

We valued the company based on a DCF analysis as we continue to expect it to generate positive FCFs. We updated our terminal value, based on the average chipmaker EV/EBITDA, to 18.44x from 23.9x previously.

SeekingAlpha, Khaveen Investments

Based on a discount rate of 13.3% (the company’s WACC), our model shows its shares are undervalued, with an upside of 99.58%.

Verdict

To conclude, we expect the outlook for the company’s data center segment to be supported by its presence across the 6 data center classes, namely supercomputers, enterprise computing, hyperscalers, cloud computing, edge computing and Factory AI, with its broad hardware solutions and partnerships with key customers. Additionally, with its full stack of AI solutions, we expect the company to leverage its competitiveness to expand its Enterprise AI software, with an estimated revenue opportunity of $55 bln by 2028. Lastly, with the planned launch of its Arm CPU by 2023, we forecasted a revenue opportunity of $10 bln by 2028 based on a 20% market share assumption.

Overall, we revised our revenue growth projections for the company to a higher average of 28.3% compared to 23.4% previously, driven by higher data center segment growth of 33.6%, up from 21.9%. However, we obtained a lower price target due to a lower average EV/EBITDA of 18.44x, from 23.4x previously, as well as a higher discount rate. That said, Nvidia’s stock price has declined by 51% YTD, which we believe presents an attractive upside. We rate the company as a Strong Buy with a target price of $289.85.
